NEW INVARIANT TO NONLINEAR SCALING QUASI-NEWTON ALGORITHMS

Abstract

New quasi-Newton methods for unconstrained optimization are proposed that are invariant to a nonlinear scaling of a strictly convex quadratic function. Specifically, we examine a logarithmic scaling of a quadratic function and derive the parameters needed to obtain invariance to such nonlinear scalings. When applied to a quadratic function, the methods considered in this work have the same convergence properties as the classical BFGS method.

Citation details of the article

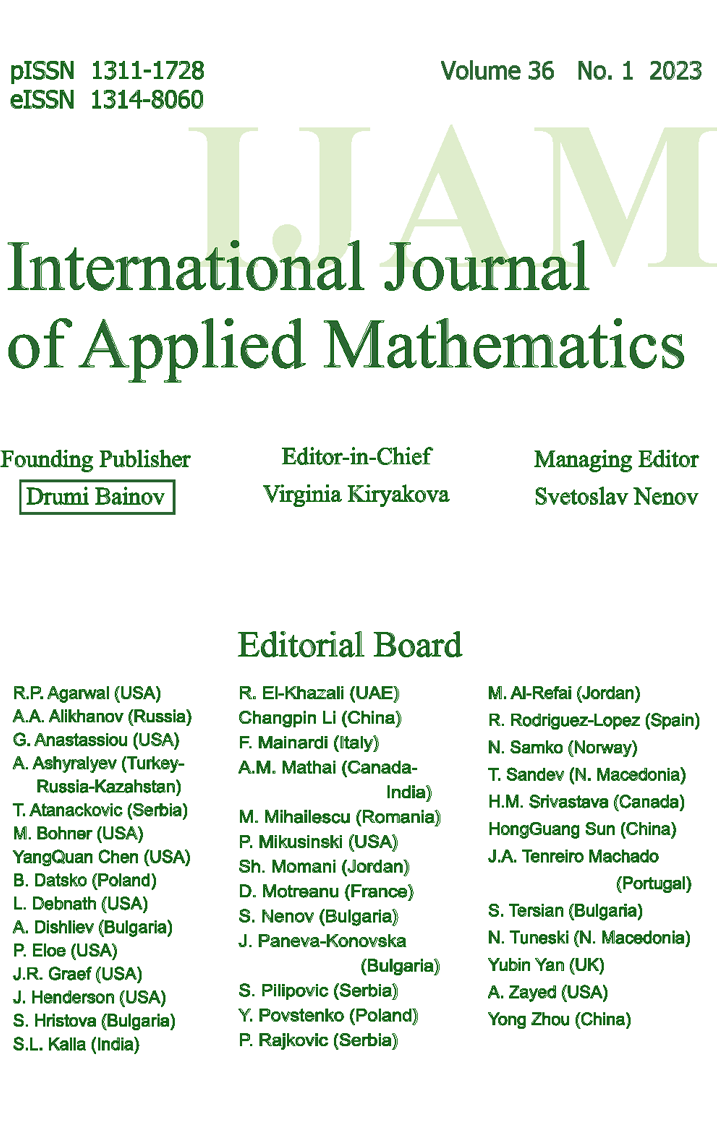

Journal: International Journal of Applied Mathematics

Journal ISSN (Print): 1311-1728

Journal ISSN (Electronic): 1314-8060

Volume: 34

Issue: 3

Year: 2021

DOI: 10.12732/ijam.v34i3.12
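
The abstract compares the proposed methods with the classical BFGS method on a quadratic function. As a point of reference only, the following is a minimal sketch of that classical baseline, not of the article's new scaling-invariant updates. It assumes the quadratic q(x) = 0.5 x^T A x - b^T x with a symmetric positive definite matrix A, an identity initial inverse-Hessian approximation, and exact line searches. The function name `bfgs_quadratic` and the sample `A`, `b` are illustrative choices that do not come from the article.

```python
import numpy as np


def bfgs_quadratic(A, b, x0, tol=1e-10):
    """Minimize q(x) = 0.5 x^T A x - b^T x with classical BFGS.

    Assumes A is symmetric positive definite (strictly convex quadratic)
    and uses the exact line-search step available for quadratics.
    """
    n = len(b)
    identity = np.eye(n)
    x = np.asarray(x0, dtype=float)
    H = np.eye(n)                      # initial inverse-Hessian approximation
    g = A @ x - b                      # gradient of q at x
    iterations = 0
    while np.linalg.norm(g) > tol and iterations < 10 * n:
        d = -H @ g                     # quasi-Newton search direction
        alpha = -(g @ d) / (d @ A @ d) # exact minimizer of q along d
        s = alpha * d                  # step taken
        x_new = x + s
        g_new = A @ x_new - b
        y = g_new - g                  # change in gradient (equals A @ s)
        rho = 1.0 / (y @ s)            # y^T s > 0 since A is positive definite
        # BFGS update of the inverse-Hessian approximation
        H = ((identity - rho * np.outer(s, y)) @ H
             @ (identity - rho * np.outer(y, s))
             + rho * np.outer(s, s))
        x, g = x_new, g_new
        iterations += 1
    return x, iterations


# Illustrative 3-by-3 strictly convex quadratic (hypothetical data).
A = np.array([[4.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 2.0]])
b = np.array([1.0, 2.0, 3.0])
x_min, iters = bfgs_quadratic(A, b, np.zeros(3))
print(x_min, iters)
```

With exact line searches, classical BFGS on a strictly convex quadratic in n variables is known to terminate in at most n iterations in exact arithmetic, so `iters` should not exceed 3 for this example, and `x_min` should satisfy `A @ x_min = b` to within rounding error.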
