SEPARATION OF TWO MUSICAL INSTRUMENTS

USING MATRIX FACTORISATION TECHNIQUES

Abstract

Source separation is an important task in audio processing. This research focuses on separating the sources of two musical instruments. The methods used are Interpolative Decomposition (ID), Nonnegative Matrix Factorisation (NMF) and Convolutive Nonnegative Matrix Factorisation (CNMF). These three matrix factorisations are simple algorithms that are also used for feature extraction in image processing. The performances of NMF, CNMF and ID are compared when they are applied to musical instrument source separation. Signal-to-noise ratio, Similarity Index and Residual Energy are used to measure the performance of each method. Numerically, NMF with the Kullback-Leibler divergence is found to perform best. However, on theoretical grounds, the Itakura-Saito divergence variants of NMF and CNMF are recommended for music-related source separation.
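As an illustration only, the NMF route described in the abstract can be sketched in NumPy, assuming the standard multiplicative updates for the beta-divergence (beta=1 gives the Kullback-Leibler divergence, beta=0 the Itakura-Saito divergence) followed by soft masking to split a mixture spectrogram into two sources. This is not the authors' code: the function names (`nmf_beta`, `separate`, `snr_db`), the toy data and the plain SNR definition are assumptions for illustration. The Similarity Index and Residual Energy are not defined in the abstract, so they are not reproduced here, and ID and CNMF are not shown.

```python
import numpy as np


def nmf_beta(V, rank, beta=1.0, n_iter=1000, eps=1e-10, seed=0):
    """Approximate V ~= W @ H with multiplicative updates for the beta-divergence.

    beta=2 is the Euclidean distance, beta=1 the Kullback-Leibler divergence and
    beta=0 the Itakura-Saito divergence (IS assumes V is strictly positive).
    """
    rng = np.random.default_rng(seed)
    n_freq, n_frames = V.shape
    W = rng.random((n_freq, rank)) + eps    # spectral templates (one per column)
    H = rng.random((rank, n_frames)) + eps  # activations over time (one per row)
    for _ in range(n_iter):
        WH = W @ H + eps
        H *= (W.T @ (WH ** (beta - 2) * V)) / (W.T @ WH ** (beta - 1) + eps)
        WH = W @ H + eps
        W *= ((WH ** (beta - 2) * V) @ H.T) / (WH ** (beta - 1) @ H.T + eps)
    return W, H


def separate(V, W, H, groups, eps=1e-10):
    """Split V into one estimate per group of components using soft (Wiener-like) masks."""
    WH = W @ H + eps
    return [V * (W[:, g] @ H[g, :]) / WH for g in groups]


def snr_db(reference, estimate):
    """Plain signal-to-noise ratio in dB (one common definition, assumed here)."""
    noise = reference - estimate
    return 10 * np.log10(np.sum(reference ** 2) / (np.sum(noise ** 2) + 1e-20))


# Toy magnitude "spectrogram" (6 frequency bins x 8 frames), not real audio:
# each hypothetical instrument is one fixed spectral template switched on and off.
w1 = np.array([1.0, 0.8, 0.0, 0.0, 0.3, 0.0])
w2 = np.array([0.0, 0.0, 1.0, 0.6, 0.0, 0.4])
h1 = np.array([1, 1, 0, 0, 1, 1, 0, 0], dtype=float)
h2 = np.array([0, 1, 1, 1, 0, 1, 1, 0], dtype=float)
S1, S2 = np.outer(w1, h1), np.outer(w2, h2)
V = S1 + S2

W, H = nmf_beta(V, rank=2, beta=1.0)  # KL-divergence NMF
est_a, est_b = separate(V, W, H, groups=[[0], [1]])

# NMF components come out in arbitrary order, so try both pairings.
snr_direct = (snr_db(S1, est_a) + snr_db(S2, est_b)) / 2
snr_swapped = (snr_db(S1, est_b) + snr_db(S2, est_a)) / 2
print(f"mean SNR: {max(snr_direct, snr_swapped):.1f} dB")
```

In a real pipeline, `V` would be the magnitude of a short-time Fourier transform of the mixture (for example from `librosa.stft`), and each masked estimate would be inverted back to audio using the mixture phase. The masks are chosen so that the separated estimates always add back up to the mixture.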

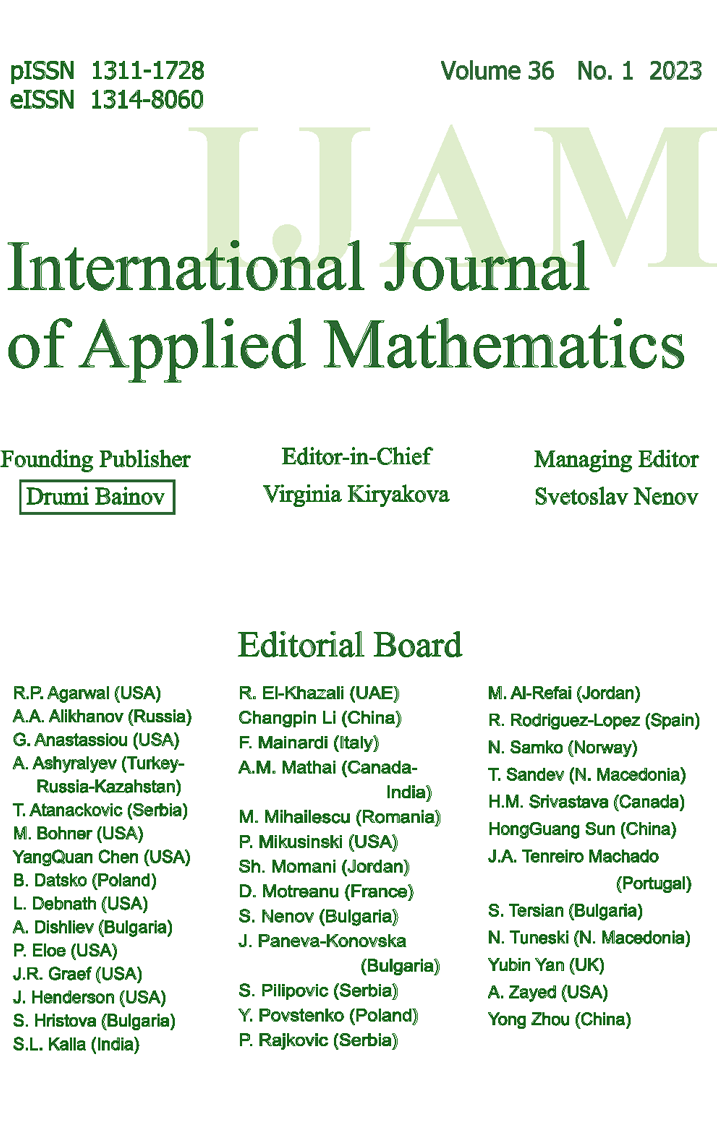

Journal: International Journal of Applied Mathematics

Journal ISSN (Print): ISSN 1311-1728

Journal ISSN (Electronic): ISSN 1314-8060

Volume: 36

Issue: 3

Year: 2023

DOI: 10.12732/ijam.v36i3.8
